Installation Guide

Installing Kiali for production.

This section describes the production installation methods available for Kiali.

The recommended way to deploy Kiali is via the Kiali Operator, either using Helm Charts or OperatorHub.

The Kiali Operator is a Kubernetes Operator that manages your Kiali installation. It watches the Kiali Custom Resource (Kiali CR), a YAML file that holds the deployment configuration.

It is only necessary to install the Kiali Operator once. After the

operator is installed you only need to

create or edit the Kiali CR.

Never manually

edit resources created by the Kiali Operator.

If you previously installed Kiali via a different mechanism, you

must first uninstall Kiali using the original mechanism’s uninstall procedures.

There is no migration path between older installation mechanisms and the

install mechanisms explained in this documentation.

1 - Prerequisites

Hardware and Software compatibility and requirements.

Istio

Before you install Kiali you must have already installed Istio along with its

telemetry storage addon (i.e. Prometheus). You might also consider installing

Istio’s optional tracing addon (i.e. Jaeger) and optional Grafana addon but

those are not required by Kiali. Refer to the

Istio documentation for details.

Enable the Debug Interface

Like istioctl, Kiali makes use of Istio’s port 8080 “Debug Interface”. Despite the naming, this is required for accessing the status of the proxies

and the Istio registry.

The ENABLE_DEBUG_ON_HTTP setting controls the relevant access. Istio suggests disabling this for security, but Kiali requires ENABLE_DEBUG_ON_HTTP=true, which is the default.

For more information, see the Istio documentation.

Version Compatibility

Each Kiali release is tested against the most recent Istio release. In general, Kiali tries to maintain compatibility with older Istio releases, and Kiali versions later than those listed in the table below may work, but such combinations are not tested and are not supported. Known incompatibilities are noted in the compatibility table below.

It is always recommended that users run a supported version of Istio.

The Istio news page posts end-of-support (EOL)

dates. Supported Kiali versions include only the Kiali versions associated with

supported Istio versions.

| Istio | Kiali Min | Kiali Max | Notes |
| --- | --- | --- | --- |
| 1.24 | 2.0.0 | | |
| 1.23 | 1.87.0 | 2.0.0 | Kiali v2 requires Kiali v1 non-default namespace management (i.e. accessible_namespaces) to migrate to Discovery Selectors. |
| 1.22 | 1.82.0 | 1.86.1 | Kiali v1.86 is the recommended minimum for Istio Ambient users. v1.22 is required starting with Kiali v1.86.1. |
| 1.21 | 1.79.0 | 1.81.0 | Kiali 1.82 - 1.86.0 may work, but v1.22 is required starting with Kiali 1.86.1. |
| 1.20 | 1.76.0 | 1.78.0 | Istio 1.20 is out of support. |
| 1.19 | 1.72.0 | 1.75.0 | Istio 1.19 is out of support. |
| 1.18 | 1.67.0 | 1.73.0 | Istio 1.18 is out of support. |
| 1.17 | 1.63.2 | 1.66.1 | Istio 1.17 is out of support. Avoid 1.63.0, 1.63.1 due to a regression. |
| 1.16 | 1.59.1 | 1.63.2 | Istio 1.16 is out of support. Avoid 1.62.0, 1.63.0, 1.63.1 due to a regression. |
| 1.15 | 1.55.1 | 1.59.0 | Istio 1.15 is out of support. |
| 1.14 | 1.50.0 | 1.54 | Istio 1.14 is out of support. |
| 1.13 | 1.45.1 | 1.49 | Istio 1.13 is out of support. |
| 1.12 | 1.42.0 | 1.44 | Istio 1.12 is out of support. |
| 1.11 | 1.38.1 | 1.41 | Istio 1.11 is out of support. |
| 1.10 | 1.34.1 | 1.37 | Istio 1.10 is out of support. |
| 1.9 | 1.29.1 | 1.33 | Istio 1.9 is out of support. |
| 1.8 | 1.26.0 | 1.28 | Istio 1.8 removes all support for mixer/telemetry V1, as does Kiali 1.26.0. Use earlier versions of Kiali for mixer support. |
| 1.7 | 1.22.1 | 1.25 | Istio 1.7 istioctl no longer installs Kiali. Use the Istio samples/addons for quick demo installs. Istio 1.7 is out of support. |
| 1.6 | 1.18.1 | 1.21 | Istio 1.6 introduces CRD and Config changes, Kiali 1.17 is recommended for Istio < 1.6. |
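As a rough illustration of how the Kiali Min and Kiali Max columns can be read, the following shell sketch checks whether a Kiali version falls inside the range of one row. The function names `version_le` and `kiali_in_range` are hypothetical helpers, not part of Kiali or Istio tooling, and the comparison assumes a `sort` that supports `-V` (version sort), as GNU coreutils does. Versions are compared literally, so a maximum listed without a patch number (for example 1.54) sorts below 1.54.1.

```shell
#!/usr/bin/env bash
# Hypothetical helpers, assuming a sort(1) with -V (version sort) support.

# version_le A B: succeeds when dotted version A is lower than or equal to B.
version_le() {
  [ "$1" = "$2" ] && return 0
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$1" ]
}

# kiali_in_range VERSION MIN MAX: succeeds when MIN <= VERSION <= MAX.
# Pass an empty MAX for a row whose Kiali Max cell is blank.
kiali_in_range() {
  local version="$1" min="$2" max="$3"
  version_le "$min" "$version" || return 1
  [ -z "$max" ] || version_le "$version" "$max"
}

# Istio 1.21 row: Kiali Min 1.79.0, Kiali Max 1.81.0
kiali_in_range 1.80.0 1.79.0 1.81.0 && echo "within the listed range"
```

A version outside the listed range is not necessarily broken; as noted above, it is simply a combination that has not been tested.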

Maistra Version Compatibility

| Maistra | SMCP CR | Kiali | Notes |
| --- | --- | --- | --- |
| 2.6 | 2.6 | 1.73 | Using Maistra 2.6 to install service mesh control plane 2.6 requires Kiali Operator v1.73. Other versions are not compatible. |
| 2.6 | 2.5 | 1.73 | Using Maistra 2.6 to install service mesh control plane 2.5 requires Kiali Operator v1.73. Other versions are not compatible. |
| 2.6 | 2.4 | 1.65 | Using Maistra 2.6 to install service mesh control plane 2.4 requires Kiali Operator v1.73. Other versions are not compatible. |
| 2.5 | 2.5 | 1.73 | Using Maistra 2.5 to install service mesh control plane 2.5 requires Kiali Operator v1.73. Other versions are not compatible. |
| 2.5 | 2.4 | 1.65 | Using Maistra 2.5 to install service mesh control plane 2.4 requires Kiali Operator v1.73. Other versions are not compatible. |
| 2.4 | 2.4 | 1.65 | Using Maistra 2.4 to install service mesh control plane 2.4 requires Kiali Operator v1.65. Other versions are not compatible. |
| n/a | 2.3 | n/a | Service mesh control plane 2.3 is out of support. |
| n/a | 2.2 | n/a | Service mesh control plane 2.2 is out of support. |
| n/a | 2.1 | n/a | Service mesh control plane 2.1 is out of support. |
| n/a | 2.0 | n/a | Service mesh control plane 2.0 is out of support. |
| n/a | 1.1 | n/a | Service mesh control plane 1.1 is out of support. |
| n/a | 1.0 | n/a | Service mesh control plane 1.0 is out of support. |

OpenShift Console Plugin (OSSMC) Version Compatibility

A Kiali Server of the same version as the OSSMC plugin must already be installed in your OpenShift cluster.

| OpenShift | OSSMC Min | OSSMC Max | Notes |
| --- | --- | --- | --- |
| 4.15+ | 1.84 | | |
| 4.12+ | 1.73 | 1.83 | All OSSMC versions from v1.73 to v1.83 are only compatible with Kiali server v1.73 |

OpenShift Service Mesh Version Compatibility

OpenShift

If you are running Red Hat OpenShift Service Mesh (OSSM), use only the bundled version of Kiali.

| OSSM | Kiali | Notes |
| --- | --- | --- |
| 2.6 | 1.73 | Same version as 2.5 |
| 2.5 | 1.73 | |
| 2.4 | 1.65 | |
| 2.3 | 1.57 | OSSM 2.3 is out of support |
| 2.2 | 1.48 | OSSM 2.2 is out of support |

Browser Compatibility

Kiali requires a modern web browser and supports the last two versions of Chrome, Firefox, Safari or Edge.

Hardware Requirements

Any machine capable of running a Kubernetes-based cluster should also be able to run Kiali.

However, Kiali tends to grow in resource usage as your cluster grows. Usually

the more namespaces and workloads you have in your cluster, the more memory you

will need to allocate to Kiali.

OpenShift

If you are installing on OpenShift, you must grant the cluster-admin role to the user that is installing Kiali. If OpenShift is installed locally on the machine you are using, the following command should log you in as user system:admin which has this cluster-admin role:

$ oc login -u system:admin

For most commands listed in this documentation, the Kubernetes CLI command kubectl is used to interact with the cluster environment. On OpenShift you can simply replace kubectl with oc, unless otherwise noted.

Google Cloud Private Cluster

Private clusters on Google Cloud have network restrictions. Kiali needs your cluster’s firewall to allow access from the Kubernetes API to the Istio Control Plane namespace, for both the 8080 and 15000 ports.

To review the master access firewall rule:

gcloud compute firewall-rules list --filter="name~gke-${CLUSTER_NAME}-[0-9a-z]*-master"

To replace the existing rule and allow master access:

gcloud compute firewall-rules update <firewall-rule-name> --allow <previous-ports>,tcp:8080,tcp:15000

Istio deployments on private clusters also need extra ports to be opened. Check the

Istio installation page for GKE to see all the extra installation steps for this platform.

2 - Install via Helm

Using Helm to install the Kiali Operator or Server.

Introduction

Helm is a popular tool that lets you manage Kubernetes applications. Applications are defined in a package called a Helm chart, which contains all of the resources needed to run an application.

Kiali has a Helm Charts Repository at

https://kiali.org/helm-charts. Two Helm

Charts are provided:

- The kiali-operator Helm Chart installs the Kiali operator, which in turn installs Kiali when you create a Kiali CR.
- The kiali-server Helm Chart installs a standalone Kiali without the need for the Operator or a Kiali CR.

The kiali-server Helm Chart does not provide all the functionality that the Operator

provides. Some features you read about in the documentation may only be available if

you install Kiali using the Operator. Therefore, although the kiali-server Helm Chart

is actively maintained, it is not recommended and is only provided for convenience.

If using Helm, the recommended method is to install the kiali-operator Helm Chart

and then create a Kiali CR to let the Operator deploy Kiali.

Make sure you have the helm command available by following the

Helm installation docs.

Helm version 3.10 is the minimum required Helm version. Older versions will not work. Newer versions have not been tested.

Adding the Kiali Helm Charts repository

Add the Kiali Helm Charts repository with the following command:

$ helm repo add kiali https://kiali.org/helm-charts

All helm commands in this page assume that you added the Kiali Helm Charts repository as shown.

If you already added the repository, you may want to update your local cache to fetch the latest definitions by running:

$ helm repo update

Installing Kiali using the Kiali operator

This installation method gives Kiali access to existing namespaces as

well as namespaces created later. See

Namespace Management for more information.

Once you’ve added the Kiali Helm Charts repository, you can install the latest

Kiali Operator along with the latest Kiali server by running the following

command:

$ helm install \

--set cr.create=true \

--set cr.namespace=istio-system \

--set cr.spec.auth.strategy="anonymous" \

--namespace kiali-operator \

--create-namespace \

kiali-operator \

kiali/kiali-operator

The --namespace kiali-operator and --create-namespace flags instruct Helm to create the kiali-operator namespace (if needed) and deploy the Kiali operator in it. The --set cr.create=true and --set cr.namespace=istio-system flags instruct Helm to create a Kiali CR in the istio-system namespace. Since the Kiali CR is created in advance, the Kiali operator processes it as soon as it starts and deploys Kiali. After Kiali has started, you can access the Kiali UI at http://localhost:20001 by executing:

kubectl port-forward service/kiali -n istio-system 20001:20001

No login is required because of --set cr.spec.auth.strategy="anonymous". Be aware that anonymous mode allows anyone to see and use Kiali. If you wish to require users to authenticate themselves by logging into Kiali, use one of the other auth strategies.

The Kiali Operator Helm Chart is configurable. Check available options and default values by running:

$ helm show values kiali/kiali-operator

You can pass the --version X.Y.Z flag to the helm install and helm show values commands to work with a specific version of Kiali.

The kiali-operator Helm Chart mirrors all settings of the Kiali CR as chart

values that you can configure using regular --set flags. For example, the

Kiali CR has a spec.server.web_root setting which you can configure in the

kiali-operator Helm Chart by passing --set cr.spec.server.web_root=/your-path

to the helm install command.

For more information about the Kiali CR, see the Creating and updating the Kiali CR page.

Operator-Only Install

To install only the Kiali Operator, omit the --set cr.create and

--set cr.namespace flags of the helm command previously shown. For example:

$ helm install \

--namespace kiali-operator \

--create-namespace \

kiali-operator \

kiali/kiali-operator

This omits creation of the Kiali CR, which you will need to create later to install the Kiali Server. This option is useful if you plan to customize the installation extensively.

Installing Multiple Instances of Kiali

By installing a single Kiali operator in your cluster, you can install multiple instances of Kiali by simply creating multiple Kiali CRs. For example, if you have two Istio control planes in namespaces istio-system and istio-system2, you can create a Kiali CR in each of those namespaces to install a Kiali instance in each control plane.

If you wish to install multiple Kiali instances in the same namespace, or if you need the Kiali instance to have different resource names than the default of kiali, you can specify spec.deployment.instance_name in your Kiali CR. The value for that setting will be used to create a unique instance of Kiali using that instance name rather than the default kiali. One use-case for this is to be able to have unique Kiali service names across multiple Kiali instances in order to be able to use certain routers/load balancers that require unique service names.

Since the spec.deployment.instance_name field is used for the Kiali resource names, including the Service name, you must ensure the value you assign to this setting follows the Kubernetes DNS Label Name rules. If it does not, the operator will abort the installation. Also note that because Kiali uses this value as a prefix (it may append additional characters for some resource names), its length is limited to 40 characters.
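To catch an invalid instance name before the operator rejects it, a local pre-check along these lines may help. This is only a sketch: `is_valid_instance_name` is a hypothetical helper, and it approximates the two constraints described above (the DNS label character rules and the 40-character limit) rather than reproducing the operator's own validation.

```shell
#!/usr/bin/env bash
# Hypothetical pre-check for spec.deployment.instance_name.
# Succeeds when NAME is 1 to 40 characters long, uses only lowercase
# alphanumerics or '-', and starts and ends with an alphanumeric character.
is_valid_instance_name() {
  local LC_ALL=C          # keep [a-z] from matching uppercase in some locales
  local name="$1"
  [ "${#name}" -ge 1 ] && [ "${#name}" -le 40 ] || return 1
  [[ "$name" =~ ^[a-z0-9]([-a-z0-9]*[a-z0-9])?$ ]]
}

is_valid_instance_name "kiali-mesh2" && echo "name looks valid"
```

A name such as `Kiali` (uppercase) or `kiali-` (trailing hyphen) fails this check.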

Standalone Kiali installation

To install the Kiali Server without the operator, use the kiali-server Helm Chart:

$ helm install \

--namespace istio-system \

kiali-server \

kiali/kiali-server

The kiali-server Helm Chart mirrors all settings of the Kiali CR as chart

values that you can configure using regular --set flags. For example, the

Kiali CR has a spec.server.web_fqdn setting which you can configure in the

kiali-server Helm Chart by passing the --set server.web_fqdn flag as

follows:

$ helm install \

--namespace istio-system \

--set server.web_fqdn=example.com \

kiali-server \

kiali/kiali-server
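Judging from the examples above, the two charts name the same Kiali CR setting differently: the kiali-operator chart prefixes the CR path with `cr.`, while the kiali-server chart drops the leading `spec.`. The sketch below captures that mapping in a hypothetical helper, `helm_set_flag`, which only prints the flag and does not call Helm. It assumes the pattern holds for the setting you pass in; check `helm show values` for the chart if in doubt.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the --set flag for a Kiali CR setting.
# Usage: helm_set_flag CHART CR_PATH VALUE
helm_set_flag() {
  local chart="$1" path="$2" value="$3"
  case "$chart" in
    # The operator chart nests the whole CR under "cr."
    kiali-operator) printf -- '--set cr.%s=%s\n' "$path" "$value" ;;
    # The server chart uses the CR path without the leading "spec."
    kiali-server)   printf -- '--set %s=%s\n' "${path#spec.}" "$value" ;;
    *) echo "unknown chart: $chart" >&2; return 1 ;;
  esac
}

helm_set_flag kiali-operator spec.server.web_root /your-path
helm_set_flag kiali-server spec.server.web_fqdn example.com
```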

Upgrading Helm installations

If you want to upgrade to a newer Kiali version (or downgrade to older

versions), you can use the regular helm upgrade commands. For example, the

following command should upgrade the Kiali Operator to the latest version:

$ helm upgrade \

--namespace kiali-operator \

--reuse-values \

kiali-operator \

kiali/kiali-operator

WARNING: No migration paths are provided. However, Kiali is a stateless application, so if the helm upgrade command fails, you can uninstall the previous version and then install the desired version.

By upgrading the Kiali Operator, existing Kiali Server installations managed with a Kiali CR will also be upgraded once the updated operator starts.

Managing configuration of Helm installations

After installing either the kiali-operator or the kiali-server Helm Charts, you may be tempted to manually edit the created resources to change the installation. However, we recommend using helm upgrade to update your installation.

For example, assuming you have the following installation:

$ helm list -n kiali-operator

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

kiali-operator kiali-operator 1 2021-09-14 18:00:45.320351026 -0500 CDT deployed kiali-operator-1.40.0 v1.40.0

Notice that the current installation is version 1.40.0 of the

kiali-operator. Let’s assume you want to use your own mirrors of the Kiali

Operator container images. You can update your installation with the following

command:

$ helm upgrade \

--namespace kiali-operator \

--reuse-values \

--set image.repo=your_mirror_registry_url/owner/kiali-operator-repo \

--set image.tag=your_mirror_tag \

--version 1.40.0 \

kiali-operator \

kiali/kiali-operator

Make sure that you specify the --reuse-values flag to take the

configuration of your current installation. Then, you only need to specify the

new settings you want to change using --set flags.

Make sure that you specify the --version X.Y.Z flag with the

version of your current installation. Otherwise, you may end up upgrading to a

new version.
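One way to avoid typing the pinned version by hand is to derive it from the CHART column of the helm list output shown earlier. The helper below, `chart_version`, is a hypothetical name, and the sketch assumes the version itself contains no hyphen (a pre-release suffix such as `-rc1` would need different handling).

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract the version from a CHART column value
# by removing everything up to and including the last hyphen.
chart_version() {
  printf '%s\n' "${1##*-}"
}

chart_version kiali-operator-1.40.0   # prints 1.40.0
```

The result can then be passed to the --version flag of helm upgrade.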

Uninstalling

Removing the Kiali operator and managed Kialis

If you used the kiali-operator Helm chart, first you must ensure that all Kiali CRs are deleted. For example, the following command will aggressively delete all Kiali CRs in your cluster:

$ kubectl delete kiali --all --all-namespaces

The previous command may take some time to finish while the Kiali operator

removes all Kiali installations.

Then, remove the Kiali operator using a standard helm uninstall command. For

example:

$ helm uninstall --namespace kiali-operator kiali-operator

$ kubectl delete crd kialis.kiali.io

If you fail to delete the Kiali CRs before uninstalling the operator,

a proper cleanup may not be done.
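The ordering requirement above can be guarded with a small wrapper. The following is a sketch under stated assumptions: `uninstall_kiali_operator` is a hypothetical function name, the release name and namespace are the ones used in this page's examples, and the function covers only the helm uninstall step (not the CRD deletion).

```shell
#!/usr/bin/env bash
# Hypothetical guard: refuse to uninstall the operator while Kiali CRs remain.
uninstall_kiali_operator() {
  local remaining
  # Count Kiali CRs across all namespaces; errors (for example a missing CRD)
  # are silenced and count as zero.
  remaining="$(kubectl get kiali --all-namespaces --no-headers 2>/dev/null | wc -l | tr -d '[:space:]')"
  if [ "$remaining" -gt 0 ]; then
    echo "refusing to uninstall: $remaining Kiali CR(s) still exist" >&2
    return 1
  fi
  helm uninstall --namespace kiali-operator kiali-operator
}
```

Call `uninstall_kiali_operator` after deleting the Kiali CRs; if any are still present, it stops before the operator is removed.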

Known problem: uninstall hangs (unable to delete the Kiali CR)

Typically this happens if not all Kiali CRs are deleted prior to uninstalling

the operator. To force deletion of a Kiali CR, you need to clear its finalizer.

For example:

$ kubectl patch kiali kiali -n istio-system -p '{"metadata":{"finalizers": []}}' --type=merge

This forces deletion of the Kiali CR and will skip uninstallation of

the Kiali Server. Remnants of the Kiali Server may still exist in your cluster

which you will need to manually remove.

Removing standalone Kiali

If you installed a standalone Kiali by using the kiali-server Helm chart, use

the standard helm uninstall commands. For example:

$ helm uninstall --namespace istio-system kiali-server

3 - Install via OperatorHub

Using OperatorHub to install the Kiali Operator.

Introduction

The OperatorHub is a website that contains a

catalog of Kubernetes Operators.

Its aim is to be the central location to find Operators.

The OperatorHub relies on the Operator Lifecycle Manager (OLM) to install, manage and update Operators on any Kubernetes cluster.

The Kiali Operator is published to the OperatorHub, so you can use the OLM to install and manage the Kiali Operator installation.

Installing the Kiali Operator using the OLM

Go to the Kiali Operator page in the OperatorHub: https://operatorhub.io/operator/kiali.

You will see an Install button at the right of the page. Press it and you

will be presented with the installation instructions. Follow these instructions

to install and manage the Kiali Operator installation using OLM.

Afterwards, you can create the Kiali CR to install Kiali.

Installing the Kiali Operator in OpenShift

The OperatorHub is bundled in the OpenShift console. To install the Kiali

Operator, simply go to the OperatorHub in the OpenShift console and search for

the Kiali Operator. Then, click the Install button and follow the instructions on the screen.

Afterwards, you can create the Kiali CR to install Kiali.

4 - The Kiali CR

Creating and updating the Kiali CR.

The Kiali Operator watches the Kiali Custom Resource (Kiali CR), a custom resource that contains the Kiali Server deployment configuration. Creating, updating, or removing a

Kiali CR will trigger the Kiali Operator to install, update, or remove Kiali.

If you want the operator to re-process the Kiali CR (called “reconciliation”) without having to change the Kiali CR’s spec fields, you can modify any annotation on the Kiali CR itself. This will trigger the operator to reconcile the current state of the cluster with the desired state defined in the Kiali CR, modifying cluster resources if necessary to get them into their desired state. Here is an example illustrating how you can modify an annotation on a Kiali CR:

$ kubectl annotate kiali my-kiali -n istio-system --overwrite kiali.io/reconcile="$(date)"

The Operator provides comprehensive defaults for all properties of the Kiali

CR. Hence, the minimal Kiali CR does not have a spec:

apiVersion: kiali.io/v1alpha1
kind: Kiali
metadata:
  name: kiali

Assuming you saved the previous YAML to a file named my-kiali-cr.yaml, and that you are

installing Kiali in the same default namespace as Istio, create the resource with the following command:

$ kubectl apply -f my-kiali-cr.yaml -n istio-system

Often, but not always, Kiali is installed in the same namespace as Istio, thus the Kiali CR is also created in the Istio namespace.

Once the resource is created, the Kiali Operator should shortly be notified and will process it, performing the Kiali installation. You can wait for the Kiali Operator to finish the reconciliation by using the standard kubectl wait command, asking it to wait for the Kiali CR to achieve the condition of Successful. For example:

kubectl wait --for=condition=Successful kiali kiali -n istio-system
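Note that kubectl wait gives up after 30 seconds by default, which may be shorter than an installation takes. A possible wrapper that sets a longer timeout is sketched below; `wait_for_kiali` is a hypothetical function name and the 300s default is an arbitrary assumption, not a value recommended by Kiali.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around kubectl wait with a configurable timeout.
# Usage: wait_for_kiali [CR_NAME] [NAMESPACE] [TIMEOUT]
wait_for_kiali() {
  local name="${1:-kiali}" ns="${2:-istio-system}" timeout="${3:-300s}"
  kubectl wait --for=condition=Successful kiali "$name" -n "$ns" --timeout="$timeout"
}
```

For example, `wait_for_kiali my-kiali istio-system 10m` waits up to ten minutes for a CR named my-kiali.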

You can check the installation progress by inspecting the status attribute of the created Kiali CR:

$ kubectl describe kiali kiali -n istio-system
Name:         kiali
Namespace:    istio-system
Labels:       <none>
Annotations:  <none>
API Version:  kiali.io/v1alpha1
Kind:         Kiali
(...some output is removed...)
Status:
  Conditions:
    Last Transition Time:  2021-09-15T17:17:40Z
    Message:               Running reconciliation
    Reason:                Running
    Status:                True
    Type:                  Running
  Deployment:
    Instance Name:  kiali
    Namespace:      istio-system
  Environment:
    Is Kubernetes:       true
    Kubernetes Version:  1.27.3
    Operator Version:    v1.89.0
  Progress:
    Duration:    0:00:16
    Message:     5. Creating core resources
  Spec Version:  default
Events:          <none>

Never manually edit resources created by the Kiali Operator; only edit the Kiali CR.

You may want to check the example install page to see some examples where the Kiali CR has a spec and to better

understand its structure. Most available attributes of the Kiali CR are

described in the pages of the Installation and

Configuration sections of the

documentation. For a complete list, see the Kiali CR Reference.

It is important to understand the

spec.deployment.cluster_wide_access setting in the CR. See the

Namespace Management page

for more information.

Once you created a Kiali CR, you can manage your Kiali installation by editing

the resource using the usual Kubernetes tools:

$ kubectl edit kiali kiali -n istio-system

To confirm your Kiali CR is valid, you can utilize the Kiali CR validation tool.

5 - The OSSMConsole CR

Creating and updating the OSSMConsole CR.

OpenShift ServiceMesh Console (aka OSSMC) provides a Kiali integration with the OpenShift Console; in other words it provides Kiali functionality within the context of the OpenShift Console. OSSMC is applicable only within OpenShift environments.

The main component of OSSMC is a plugin that gets installed inside the OpenShift Console. Prior to installing this plugin, you are required to have already installed the Kiali Operator and Kiali Server in your OpenShift environment. Please see the Installation Guide for details.

There are no helm charts available to install OSSMC. You must utilize the Kiali Operator to install it. Installing the Kiali Operator on OpenShift is very easy due to the Operator Lifecycle Manager (OLM) functionality that comes with OpenShift out of the box. Simply elect to install the Kiali Operator from the Red Hat or Community Catalog from the OperatorHub page in OpenShift Console.

The Kiali Operator watches the OSSMConsole Custom Resource (OSSMConsole CR), a custom resource that contains the OSSMC deployment configuration. Creating, updating, or removing an OSSMConsole CR will trigger the Kiali Operator to install, update, or remove OSSMC.

Never manually edit resources created by the Kiali Operator; only edit the OSSMConsole CR.

Creating the OSSMConsole CR to Install the OSSMC Plugin

With the Kiali Operator and Kiali Server installed and running, you can install the OSSMC plugin in one of two ways: either via the OpenShift Console or via the “oc” CLI. Both methods are described below. You choose the method you want to use.

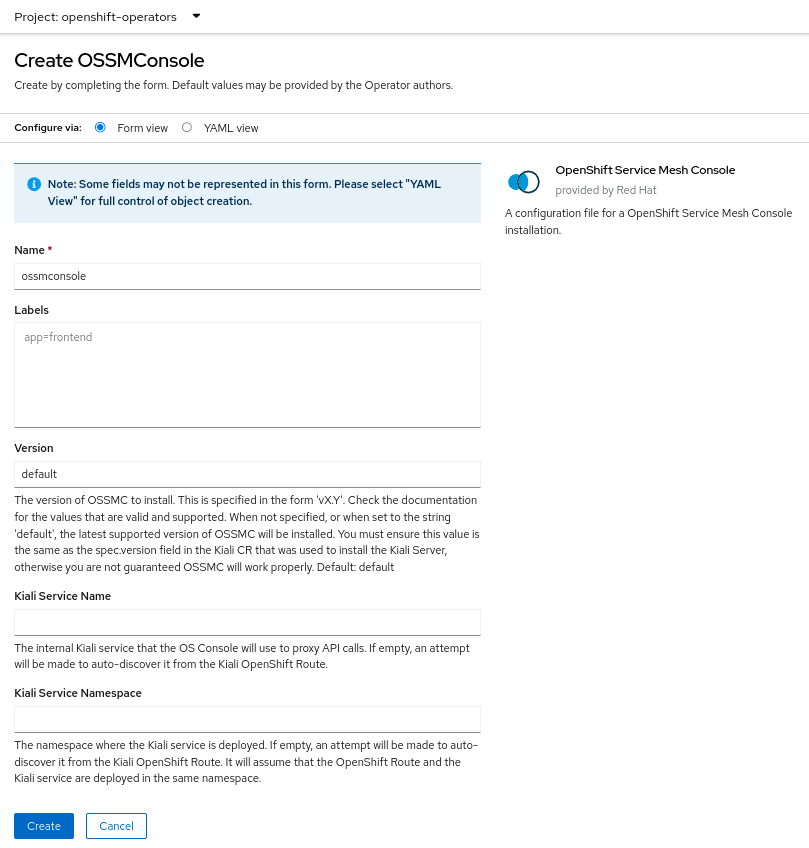

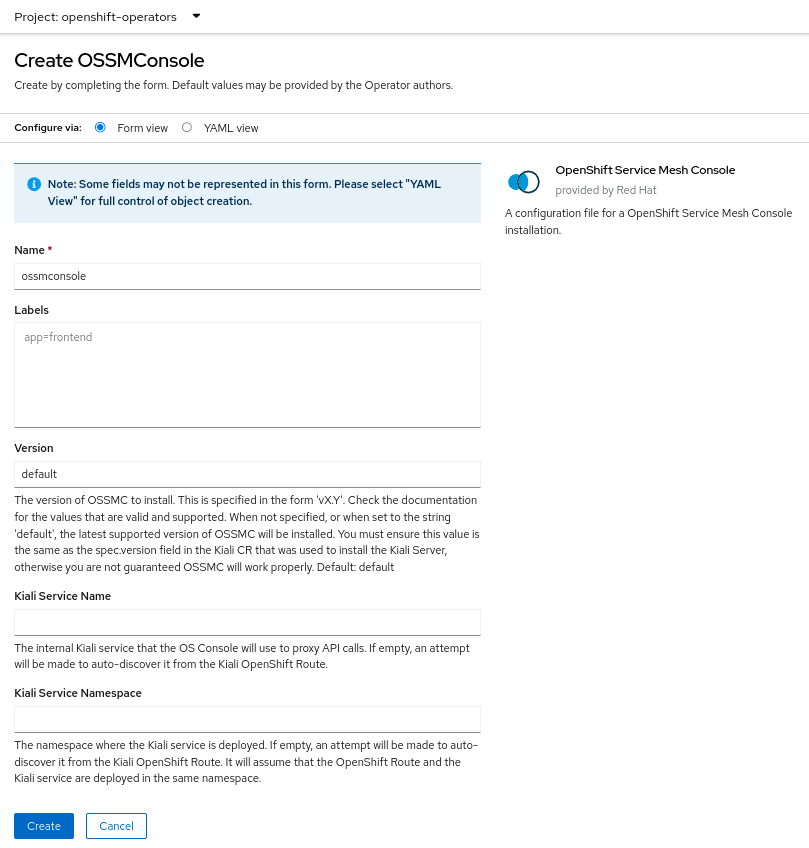

You should specify the spec.version field of the OSSMConsole CR, and its value must be the same version as that of the Kiali Server (i.e. it must match the spec.version of the Kiali Server’s Kiali CR). Normally, you can just set spec.version to default, which tells the Kiali Operator to install OSSMC whose version is the same as that of the operator itself. Alternatively, you may specify one of the supported versions in the format vX.Y.

Installing via OpenShift Console

From the Kiali Operator details page in the OpenShift Console, create an instance of the “OpenShift Service Mesh Console” resource. Accept the defaults on the installation form and press “Create”.

Installing via “oc” CLI

To instruct the Kiali Operator to install the plugin, simply create a small OSSMConsole CR. A minimal CR can be created like this:

cat <<EOM | oc apply -f -
apiVersion: kiali.io/v1alpha1
kind: OSSMConsole
metadata:
  namespace: openshift-operators
  name: ossmconsole
spec:
  version: default
EOM

Note that the operator will deploy the plugin resources in the same namespace where you create this OSSMConsole CR (in this case, openshift-operators), but you can create the CR in any namespace.
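Since the namespace and version are the only values that typically vary in a minimal CR, the manifest can be generated from parameters. The function below, `ossmconsole_cr`, is a hypothetical helper that only prints the YAML; it assumes the resource name ossmconsole from the example above.

```shell
#!/usr/bin/env bash
# Hypothetical generator for a minimal OSSMConsole CR.
# Usage: ossmconsole_cr NAMESPACE [VERSION]   (VERSION defaults to "default")
ossmconsole_cr() {
  local namespace="$1" version="${2:-default}"
  if [ -z "$namespace" ]; then
    echo "usage: ossmconsole_cr NAMESPACE [VERSION]" >&2
    return 1
  fi
  cat <<EOM
apiVersion: kiali.io/v1alpha1
kind: OSSMConsole
metadata:
  namespace: ${namespace}
  name: ossmconsole
spec:
  version: ${version}
EOM
}
```

To apply the result, pipe it to the CLI, for example `ossmconsole_cr my-namespace | oc apply -f -`.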

For a complete list of configuration options available within the OSSMConsole CR, see the OSSMConsole CR Reference.

To confirm your OSSMConsole CR is valid, you can utilize the OSSMConsole CR validation tool.

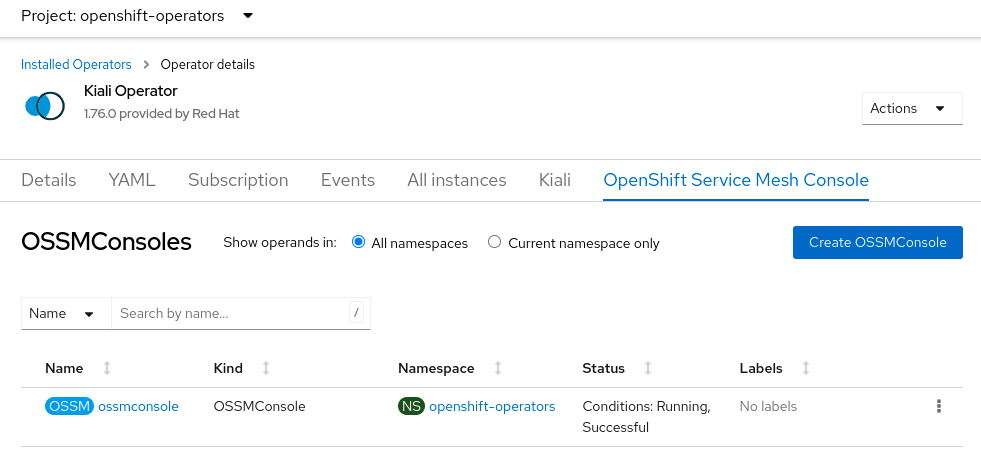

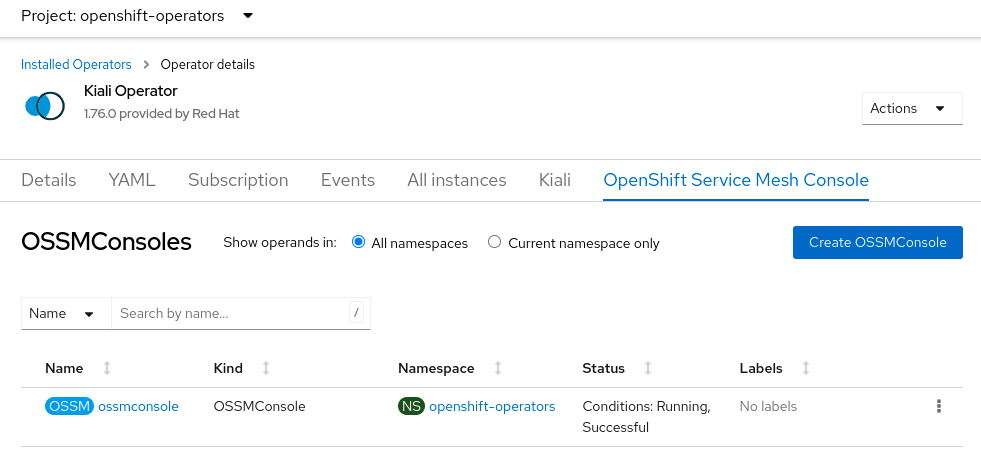

Installation Status

After the plugin is installed, you can see the “OSSMConsole” resource that was created in the OpenShift Console UI. Within the operator details page in the OpenShift Console UI, select the OpenShift Service Mesh Console tab to view the resource that was created and its status. The CR status field will provide you with any error messages should the deployment of OSSMC fail.

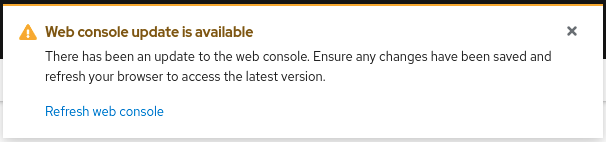

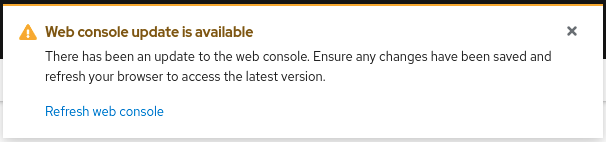

Once the operator has finished processing the OSSMConsole CR, you must then wait for the OpenShift Console to load and initialize the plugin. This may take a minute or two. You will know the plugin is ready when the OpenShift Console pops up this message. When you see it, refresh the browser window to reload the OpenShift Console:

Uninstalling OSSMC

This section describes how to uninstall the OpenShift Service Mesh Console plugin. You can uninstall the plugin in one of two ways: either via the OpenShift Console or via the “oc” CLI. Both methods are described in the sections below. You choose the method you want to use.

If you intend to also uninstall the Kiali Operator, it is very important to first uninstall the OSSMConsole CR and then uninstall the operator. If you uninstall the operator before ensuring the OSSMConsole CR is deleted then you may have difficulty removing that CR and its namespace. If this occurs then you must manually remove the finalizer on the CR in order to delete it and its namespace. You can do this via: oc patch ossmconsoles <CR name> -n <CR namespace> -p '{"metadata":{"finalizers": []}}' --type=merge

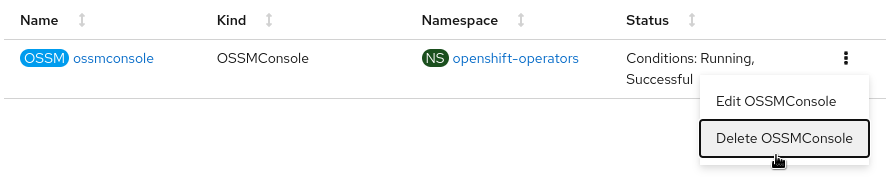

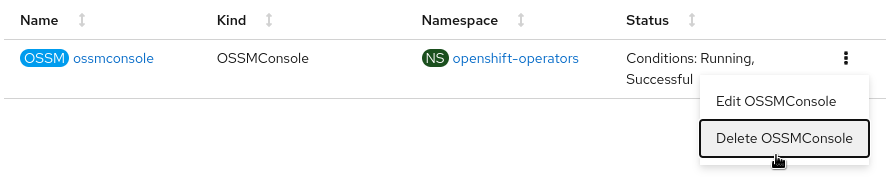

Uninstalling via OpenShift Console

Remove the OSSMConsole CR by navigating to the operator details page in the OpenShift Console UI. From the operator details page, select the OpenShift Service Mesh Console tab and then select the Delete option in the kebab menu.

Uninstalling via “oc” CLI

Remove the OSSMConsole CR via oc delete ossmconsoles <CR name> -n <CR namespace>. To make sure any and all CRs are deleted from any and all namespaces, you can run this command:

for r in $(oc get ossmconsoles --ignore-not-found=true --all-namespaces -o custom-columns=NS:.metadata.namespace,N:.metadata.name --no-headers | sed 's/  */:/g'); do oc delete ossmconsoles -n "$(echo "$r" | cut -d: -f1)" "$(echo "$r" | cut -d: -f2)"; done

6 - Accessing Kiali

Accessing and exposing the Kiali UI.

Introduction

After Kiali is successfully installed you will need to make Kiali accessible to users. This page describes some popular methods of exposing Kiali for use.

If exposing Kiali in a custom way, you may need to set some configurations

to make Kiali aware of how users will access Kiali.

The examples on this page assume that you followed the

Installation guide to install Kiali, and that you

installed Kiali in the

istio-system namespace.

Accessing Kiali using port forwarding

This method should work in any kind of Kubernetes cluster.

You can use port-forwarding to access Kiali by running any of these commands:

# If you have oc command line tool

oc port-forward svc/kiali 20001:20001 -n istio-system

# If you have kubectl command line tool

kubectl port-forward svc/kiali 20001:20001 -n istio-system

These commands will block. Access Kiali by visiting https://localhost:20001/ in

your preferred web browser.

Please note that this method exposes Kiali only to the local machine, not to external users. You must have the necessary privileges to perform port forwarding.

Accessing Kiali through an Ingress

You can configure Kiali to be installed with an

Ingress resource

defined, allowing you to access

the Kiali UI through the Ingress. By default, an Ingress will not be created. You can

enable a simple Ingress by setting spec.deployment.ingress.enabled to true in the Kiali

CR (a similar setting for the server Helm chart is available if you elect to install Kiali

via Helm as opposed to the Kiali Operator).

The spec.deployment.ingress mechanism is a convenient way to expose Kiali externally, but it will not necessarily work, or be the best approach, because how you should expose Kiali depends heavily on your specific cluster environment and on how services are generally exposed in that environment.

When installing on an OpenShift cluster, an OpenShift Route will be installed (not an Ingress).

This Route will be installed by default unless you explicitly

disable it via spec.deployment.ingress.enabled: false. Note that the Route is required

if you configure Kiali to use the auth strategy of openshift (which is the default

auth strategy Kiali will use when installed on OpenShift).

The default Ingress that is created will be configured for a typical NGINX implementation. If you want to use your own Ingress implementation, you can override the default configuration through the settings spec.deployment.ingress.override_yaml and spec.deployment.ingress.class_name. More details on customizing the Ingress can be found below.

The Ingress IP or domain name should then be used to access the Kiali UI. To find your Ingress IP or domain name, as per

the Kubernetes documentation,

try the following command (though this may not work if using Minikube without the ingress addon):

kubectl get ingress kiali -n istio-system -o jsonpath='{.status.loadBalancer.ingress[0].ip}'

If that does not work, the right approach depends on how and where you set up your cluster. There are several Ingress controllers available, and some cloud providers have their own controller or preferred exposure method. Check the documentation of your cloud provider. You may need to customize the pre-installed Ingress rule or expose Kiali using a different method.
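One common reason the jsonpath query above returns nothing is that some load balancers report a hostname rather than an IP in the Ingress status. The sketch below tries the `ip` field first and falls back to `hostname`; `kiali_ingress_address` is a hypothetical helper, and it assumes the Ingress is named kiali as in the command above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the Ingress address, preferring an IP and
# falling back to a hostname. Fails when neither is reported.
# Usage: kiali_ingress_address [NAMESPACE]
kiali_ingress_address() {
  local ns="${1:-istio-system}" addr
  addr="$(kubectl get ingress kiali -n "$ns" -o jsonpath='{.status.loadBalancer.ingress[0].ip}')"
  if [ -z "$addr" ]; then
    addr="$(kubectl get ingress kiali -n "$ns" -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')"
  fi
  [ -n "$addr" ] && printf '%s\n' "$addr"
}
```

If the function prints nothing, the Ingress controller has not published an address, and the provider-specific guidance above applies.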

Customizing the Ingress resource

The created Ingress resource will route traffic to Kiali regardless of the domain in the URL.

You may need a more specific Ingress resource that routes traffic

to Kiali only on a specific domain or path. To do this, you can specify route settings.

Alternatively, and for more advanced Ingress configurations, you can provide your own

Ingress declaration in the Kiali CR. For example:

When installing on an OpenShift cluster, the deployment.ingress.override_yaml will be applied

to the created Route. The deployment.ingress.class_name is ignored on OpenShift.

spec:
  deployment:
    ingress:
      class_name: "nginx"
      enabled: true
      override_yaml:
        metadata:
          annotations:
            nginx.ingress.kubernetes.io/secure-backends: "true"
            nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
        spec:
          rules:
          - http:
              paths:
              - path: /kiali
                backend:
                  serviceName: kiali
                  servicePort: 20001

Accessing Kiali in Minikube

If you enabled the Ingress controller,

the default Ingress resource created by the installation (mentioned in the previous section) should be enough to access

Kiali. The following command should open Kiali in your default web browser:

xdg-open https://$(minikube ip)/kiali

Accessing Kiali through a LoadBalancer or a NodePort

By default, the Kiali service is created with the ClusterIP type. To use a

LoadBalancer or a NodePort, you can change the service type in the Kiali CR as

follows:

spec:
  deployment:
    service_type: LoadBalancer

Once the Kiali operator updates the installation, you should be able to use

the kubectl get svc -n istio-system kiali command to retrieve the external

address (or port) to access Kiali. For example, in the following output Kiali

is assigned the IP 192.168.49.201, which means that you can access Kiali by

visiting http://192.168.49.201:20001 in a browser:

NAME    TYPE           CLUSTER-IP       EXTERNAL-IP      PORT(S)                          AGE
kiali   LoadBalancer   10.105.236.127   192.168.49.201   20001:31966/TCP,9090:30128/TCP   34d

If you are using the LoadBalancer service type to directly expose the Kiali

service, you may want to check the available options for the

HTTP Server and

Metrics server.

Accessing Kiali through an Istio Ingress Gateway

If you want to take advantage of Istio’s infrastructure, you can expose Kiali

using an Istio Ingress Gateway. The Istio documentation provides a

good guide explaining how to expose the sample add-ons.

Although the Istio guide focuses on the sample add-ons, the same steps apply to a Kiali instance installed using this Installation Guide.

Accessing Kiali in OpenShift

By default, Kiali is exposed through a Route if installed on OpenShift. The following command

should open Kiali in your default web browser:

xdg-open https://$(oc get routes -n istio-system kiali -o jsonpath='{.spec.host}')/console

Specifying route settings

If you are using your own exposure method or if you are using one of

the methods mentioned in this page, you may need to configure the route that is

being used to access Kiali.

In the Kiali CR, route settings are split across several attributes. For example,

to specify that Kiali is being accessed under the

https://apps.example.com:8080/dashboards/kiali URI, you would need to set the

following:

spec:
  server:
    web_fqdn: apps.example.com
    web_port: 8080
    web_root: /dashboards/kiali
    web_schema: https

If you are letting the installation create an Ingress resource for you,

the Ingress will be adjusted to match these route settings.

If you are using your own exposure method, these spec.server settings only make Kiali aware of what its public endpoint is.
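To make the mapping between the four attributes and the public URI concrete, the following sketch joins them back together. It is an illustration only, not how Kiali assembles the URL internally, and the function name kiali_public_url is hypothetical.

```shell
# Hypothetical helper: assemble the public Kiali URL from the four route settings.
# Arguments: web_schema web_fqdn web_port web_root
kiali_public_url() {
  schema="$1"; fqdn="$2"; port="$3"; root="$4"
  printf '%s://%s:%s%s\n' "$schema" "$fqdn" "$port" "$root"
}

kiali_public_url https apps.example.com 8080 /dashboards/kiali
# prints: https://apps.example.com:8080/dashboards/kiali
```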

It is possible to omit these settings and Kiali may be able to discover some of

these configurations, depending on your exposure method. For example, if you

are exposing Kiali via LoadBalancer or NodePort service types, Kiali can

discover most of these settings. If you are using some kind of Ingress, Kiali

will honor X-Forwarded-Proto, X-Forwarded-Host and X-Forwarded-Port HTTP

headers if they are properly injected in the request.

The web_root receives special treatment, because this is the path where Kiali

will serve itself (both the user interface and its API). This is useful if you

are serving multiple applications under the same domain. It must begin with a

slash and trailing slashes must be omitted. The default value is /kiali for

Kubernetes and / for OpenShift.
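The two rules for web_root can be checked before the value is placed in the Kiali CR. The following is a minimal sketch of such a check. The function name valid_web_root is hypothetical, and the check covers only the leading and trailing slash rules stated above, not any other validation the operator may perform.

```shell
# Hypothetical check mirroring the two rules stated above for web_root.
valid_web_root() {
  case "$1" in
    /)   return 0 ;;  # the bare root, the default on OpenShift
    /*/) return 1 ;;  # a trailing slash must be omitted
    /*)  return 0 ;;  # begins with a slash and has no trailing slash
    *)   return 1 ;;  # does not begin with a slash
  esac
}

valid_web_root /dashboards/kiali && echo "accepted"
valid_web_root /kiali/ || echo "rejected"
```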

Usually, these settings can be omitted. However, a few features require that Kiali’s public route be either properly discoverable or properly configured; most notably, the OpenID authentication.

7 - Advanced Install

Advanced installation options.

Canary upgrades

During a canary upgrade where multiple control planes are present, Kiali will automatically detect both control planes. You can visit the mesh page to visualize your control planes during a canary upgrade. You can also manually specify the control plane you want Kiali to connect to through the Kiali CR; however, if you do this, you will need to update these settings during each canary upgrade.

spec:
  external_services:
    istio:
      config_map_name: "istio"
      istiod_deployment_name: "istiod"
      istio_sidecar_injector_config_map_name: "istio-sidecar-injector"

Installing a Kiali Server of a different version than the Operator

When you install the Kiali Operator, it will be configured to install a Kiali

Server that is the same version as the operator itself. For example, if you

have Kiali Operator v1.34.0 installed, that operator will install Kiali Server

v1.34.0. If you upgrade (or downgrade) the Kiali Operator, the operator will in

turn upgrade (or downgrade) the Kiali Server.

There are certain use cases in which you want the Kiali Operator to install a Kiali Server whose version is different from the operator version. Read the following section, «Using a custom image registry», to learn how to configure this setup.

Using a custom image registry

Kiali is released and published to the Quay.io container image registry. There is a repository hosting the Kiali operator images and another one for the Kiali server images.

If you need to mirror the Kiali container images to some other registry, you can still use Helm to install the Kiali operator as follows:

$ helm install \
    --namespace kiali-operator \
    --create-namespace \
    --set image.repo=your.custom.registry/owner/kiali-operator-repo \
    --set image.tag=your_custom_tag \
    --set allowAdHocKialiImage=true \
    kiali-operator \
    kiali/kiali-operator

Notice the --set allowAdHocKialiImage=true option, which allows specifying a

custom image in the Kiali CR. For security reasons, this is disabled by

default.

Then, when creating the Kiali CR, use the following attributes:

spec:
  deployment:
    image_name: your.custom.registry/owner/kiali-server-repo
    image_version: your_custom_tag

Change the default image

As explained earlier, when you install the Kiali Operator, it will be

configured to install a Kiali Server whose image will be pulled from quay.io

and whose version will be the same as the operator. You can ask the operator to

use a different image by setting spec.deployment.image_name and

spec.deployment.image_version within the Kiali CR (as explained above).

However, you may wish to alter this default behavior exhibited by the operator.

In other words, you may want the operator to install a different Kiali Server

image by default. For example, if you have an air-gapped environment with its

own image registry that contains its own copy of the Kiali Server image, you

will want the operator to install a Kiali Server that uses that image by

default, as opposed to quay.io/kiali/kiali. By configuring the operator to do this, authors of Kiali CRs do not have to explicitly define the spec.deployment.image_name setting, and you do not need to enable the allowAdHocKialiImage setting in the operator.

To change the default Kiali Server image installed by the operator, set the

environment variable RELATED_IMAGE_kiali_default in the Kiali Operator

deployment. The value of that environment variable must be the full image tag

in the form repoName/orgName/imageName:versionString (e.g.

my.internal.registry.io/mykiali/mykialiserver:v1.50.0). You can do this when

you install the operator via helm:

$ helm install \
    --namespace kiali-operator \
    --create-namespace \
    --set "env[0].name=RELATED_IMAGE_kiali_default" \
    --set "env[0].value=my.internal.registry.io/mykiali/mykialiserver:v1.50.0" \
    kiali-operator \
    kiali/kiali-operator

Development Install

This option installs the latest Kiali Operator and Kiali Server images which

are built from the master branches of Kiali GitHub repositories. This option is

good for demo and development installations.

helm install \
    --set cr.create=true \
    --set cr.namespace=istio-system \
    --set cr.spec.deployment.image_version=latest \
    --set image.tag=latest \
    --namespace kiali-operator \
    --create-namespace \
    kiali-operator \
    kiali/kiali-operator

8 - Example Install

Installing two Kiali servers via the Kiali Operator.

This is a quick example of installing Kiali. This example will install the operator and two Kiali Servers - one server will require the user to enter credentials at a login screen in order to obtain read-write access and the second server will allow anonymous read-only access.

For this example, assume there is a Minikube Kubernetes cluster running with an

Istio control plane installed in the namespace istio-system and

the Istio Bookinfo Demo installed in the namespace bookinfo:

$ kubectl get deployments.apps -n istio-system
NAME                   READY   UP-TO-DATE   AVAILABLE   AGE
grafana                1/1     1            1           8h
istio-egressgateway    1/1     1            1           8h
istio-ingressgateway   1/1     1            1           8h
istiod                 1/1     1            1           8h
jaeger                 1/1     1            1           8h
prometheus             1/1     1            1           8h

$ kubectl get deployments.apps -n bookinfo
NAME             READY   UP-TO-DATE   AVAILABLE   AGE
details-v1       1/1     1            1           21m
productpage-v1   1/1     1            1           21m
ratings-v1       1/1     1            1           21m
reviews-v1       1/1     1            1           21m
reviews-v2       1/1     1            1           21m
reviews-v3       1/1     1            1           21m

Install Kiali Operator via Helm Chart

First, the Kiali Operator will be installed in the kiali-operator namespace using the operator helm chart:

$ helm repo add kiali https://kiali.org/helm-charts
$ helm repo update kiali
$ helm install \
    --namespace kiali-operator \
    --create-namespace \
    kiali-operator \
    kiali/kiali-operator

Install Kiali Server via Operator

Next, the first Kiali Server will be installed. This server will require the user to enter a Kubernetes token in order to log into the Kiali dashboard and will provide the user with read-write access. To do this, a Kiali CR will be created that looks like this (file: kiali-cr-token.yaml):

apiVersion: kiali.io/v1alpha1
kind: Kiali
metadata:
  name: kiali
  namespace: istio-system
spec:
  auth:
    strategy: "token"
  deployment:
    cluster_wide_access: false
    discovery_selectors:
      default:
      - matchLabels:
          kubernetes.io/metadata.name: bookinfo
    view_only_mode: false
  server:
    web_root: "/kiali"

This Kiali CR instructs the operator to deploy the Kiali Server in the same namespace as the Kiali CR (istio-system). The operator will configure the server to respond to requests at the web root path /kiali, enable read-write access, use the token authentication strategy, and have access to the bookinfo namespace:

$ kubectl apply -f kiali-cr-token.yaml

Get the Status of the Installation

The status of a particular Kiali Server installation can be found by examining the status field of its corresponding Kiali CR. For example:

$ kubectl get kiali kiali -n istio-system -o jsonpath='{.status}'

When the installation has successfully completed, the status field will look something like this (when formatted):

$ kubectl get kiali kiali -n istio-system -o jsonpath='{.status}' | jq

{
  "conditions": [
    {
      "ansibleResult": {
        "changed": 21,
        "completion": "2021-10-20T19:17:35.519131",
        "failures": 0,
        "ok": 102,
        "skipped": 90
      },
      "lastTransitionTime": "2021-10-20T19:17:12Z",
      "message": "Awaiting next reconciliation",
      "reason": "Successful",
      "status": "True",
      "type": "Running"
    }
  ],
  "deployment": {
    "discoverySelectorNamespaces": "bookinfo,istio-system",
    "instanceName": "kiali",
    "namespace": "istio-system"
  },
  "environment": {
    "isKubernetes": true,
    "kubernetesVersion": "1.28.0",
    "operatorVersion": "v1.88.0"
  },
  "progress": {
    "duration": "0:00:14",
    "message": "7. Finished all resource creation"
  }
}
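When only the progress message matters, the jsonpath expression can be narrowed to that single field. The following is a minimal sketch, assuming the status layout shown in the output above. The function name kiali_progress is hypothetical.

```shell
# Hypothetical helper: print the operator's latest progress message for a Kiali CR.
# Arguments (both optional): CR name, CR namespace
kiali_progress() {
  name="${1:-kiali}"
  ns="${2:-istio-system}"
  kubectl get kiali "$name" -n "$ns" -o jsonpath='{.status.progress.message}'
}
```

Running kiali_progress with no arguments inspects the Kiali CR named kiali in istio-system. For the second server installed later in this example, the call would be kiali_progress kiali kialianon.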

Access the Kiali Server UI

The Kiali Server UI is accessed by pointing a browser to the Kiali Server endpoint and requesting the web root /kiali:

xdg-open http://$(minikube ip)/kiali

Because the auth.strategy was set to token, that URL will display the Kiali login screen that will require a Kubernetes token in order to authenticate with the server. For this example, you can use the token that belongs to the Kiali service account itself:

$ kubectl get secret -n istio-system $(kubectl get sa kiali-service-account -n istio-system -o jsonpath='{.secrets[0].name}') -o jsonpath='{.data.token}' | base64 -d

The output of that command can be used to log in at the Kiali login screen.
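On Kubernetes 1.24 and later, a token Secret is no longer created automatically for each service account, so the Secret lookup can come back empty. In that case, a short-lived token can be requested with kubectl create token instead. The following is a minimal sketch, assuming the service account is named kiali-service-account as in this example. The function name kiali_login_token is hypothetical.

```shell
# Hypothetical helper: request a short-lived token for the Kiali service account.
# "kubectl create token" is available in kubectl 1.24 and later.
kiali_login_token() {
  ns="${1:-istio-system}"
  kubectl create token kiali-service-account -n "$ns"
}
```

The token printed by kiali_login_token can be pasted into the Kiali login screen in the same way. It expires after a limited time, so a new one may need to be requested for a later login.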

Install a Second Kiali Server

Next, the second Kiali Server will be installed. This server will not require the user to enter any login credentials, but it will provide only a read-only view of the service mesh. To do this, a Kiali CR will be created that looks like this (file: kiali-cr-anon.yaml):

apiVersion: kiali.io/v1alpha1
kind: Kiali
metadata:
  name: kiali
  namespace: kialianon
spec:
  installation_tag: "Kiali - View Only"
  istio_namespace: "istio-system"
  auth:
    strategy: "anonymous"
  deployment:
    cluster_wide_access: false
    discovery_selectors:
      default:
      - matchLabels:
          kubernetes.io/metadata.name: bookinfo
    view_only_mode: true
    instance_name: "kialianon"
  server:
    web_root: "/kialianon"

This Kiali CR will command the operator to deploy the Kiali Server in the same namespace where the Kiali CR is (kialianon). The operator will configure the server to: respond to requests to the web root path of /kialianon, disable read-write access, not require the user to authenticate, have a unique instance name of kialianon and be given access to the bookinfo namespace. The Kiali UI will also show a custom title in the browser tab so the user is aware they are looking at a “view only” Kiali dashboard. The unique deployment.instance_name is needed in order for this Kiali Server to be able to share access to the Bookinfo application with the first Kiali Server.

$ kubectl create namespace kialianon

$ kubectl apply -f kiali-cr-anon.yaml

The UI for this second Kiali Server is accessed by pointing a browser to the Kiali Server endpoint and requesting the web root /kialianon. Note that no credentials are required to gain access to this Kiali Server UI because auth.strategy was set to anonymous; however, the user will not be able to modify anything via the Kiali UI - it is strictly “view only”:

xdg-open http://$(minikube ip)/kialianon

A Kiali Server can be reconfigured by editing its Kiali CR. The Kiali Operator will perform all the necessary tasks to complete the reconfiguration and restart the Kiali Server pod when necessary. For example, to change the web root for the Kiali Server:

$ kubectl patch kiali kiali -n istio-system --type merge --patch '{"spec":{"server":{"web_root":"/specialkiali"}}}'

The Kiali Operator will update the necessary resources (such as the Kiali ConfigMap) and will restart the Kiali Server pod to pick up the new configuration.

Uninstall Kiali Server

To uninstall a Kiali Server installation, simply delete the Kiali CR. The Kiali Operator will then perform all the necessary tasks to remove all remnants of the associated Kiali Server.

kubectl delete kiali kiali -n istio-system

Uninstall Kiali Operator

To uninstall the Kiali Operator, use helm uninstall and then manually remove the Kiali CRD.

You must delete all Kiali CRs in the cluster prior to uninstalling the Kiali Operator. If you fail to do this, uninstalling the operator will hang, remnants of Kiali Server installations will remain in your cluster, and you will be required to perform some manual steps to clean them up.

$ kubectl delete kiali --all --all-namespaces

$ helm uninstall --namespace kiali-operator kiali-operator

$ kubectl delete crd kialis.kiali.io